If you spend any time on the R subreddits or forums, you’ve likely encountered some variation of a post where a newer user asks how to perform a task in R, and somebody comes along and says “you should just do it in Python.” That’s not particularly helpful advice for somebody who wants to do a thing in R, and most of the time the biggest difference between the two languages comes down to how you want to think about the problem while you’re solving it. With that in mind, know I’m on your side when I say: I recommend making peace with the official OpenAI Python SDK for your API calls. While there are plenty of R packages written to interface with ChatGPT, they go out of date quickly because the API is changing so rapidly. My goal in this post is to demonstrate using reticulate to borrow the Python SDK’s API functionality and send the results back to R, where the actual analysis work gets done. We’re going to use sentiment analysis as a means of investigating this workflow.

Setting Up Your Environment

I’m going to take the easy way out from a writing standpoint and send you to the reticulate documentation to set up your Python environment. The important things are that you install openai via pip and that you set your API key as an environment variable. Starting from scratch in an R project, that would look like so:

library(reticulate)

virtualenv_create("ppa_site_env", packages = c("pandas", "numpy", "openai"))

# you would likely replace the config::get() call with just your API key; I store mine in a config file.

Sys.setenv(OPENAI_API_KEY=config::get()$openai)

The Client Object

For those who are primarily familiar with R and not as much with Python, it’s helpful to understand a key difference in how object-oriented programming (OOP) is approached in these two languages.

In R, particularly with its S3 object system, you assign a class to an object, and that class determines how functions interact with it. For instance, when you create a data frame (x = data.frame(…)), it carries the data.frame class, and generic functions dispatch to the methods written for that class. You interact with it through those functions: subsetting (x[["column"]] or x["row", "column"]), printing (print(x)), or helpers like subset(). In R’s OOP, methods are more like ordinary functions that behave differently based on the class of their arguments.

Python, on the other hand, adopts a more integrated approach to OOP. Here, classes define objects that encapsulate both data and the functions (methods) that operate on that data. When you create an object of a certain class in Python, it comes with methods (functions that are part of the object) and attributes (data that is part of the object). For example, if you create a list (x = [1, 2, 3]), it has methods like x.append(4) that are part of the list object itself. This is a key distinction: in Python, the methods are part of the objects, not separate functions that change behavior based on the object type.
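To make the contrast concrete without leaving Python, here is a minimal sketch that puts the two styles side by side. The first half imitates R's S3 dispatch using the standard library's functools.singledispatch; the second half is an ordinary Python class. The names describe and Basket are made up for illustration and are not part of any library discussed in this post.

```python
from functools import singledispatch


# R/S3 style: one free-standing generic function whose behaviour
# depends on the class of its argument.
@singledispatch
def describe(x):
    return f"an object of type {type(x).__name__}"


@describe.register
def _(x: list):
    return f"a list with {len(x)} items"


# Python style: the behaviour is a method that lives on the object.
class Basket:
    def __init__(self, items):
        self.items = list(items)  # attribute: data stored on the object

    def add(self, item):  # method: function defined on the class
        self.items.append(item)
        return self

    def describe(self):
        return f"a basket with {len(self.items)} items"


s3_style = describe([1, 2, 3])  # generic function; the argument decides
basket = Basket([1, 2, 3])
method_style = basket.add(4).describe()  # methods called on the object itself

print(s3_style)
print(method_style)
```

The OpenAI client used later in this post follows the second pattern: you build one object and then reach everything through its methods and attributes.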

So while a lot of R code might look like:

x = 1:3

x = append(x, 4)

x

[1] 1 2 3 4

a more Pythonic approach looks like:

x = [1, 2, 3]

x.append(4)

x

[1, 2, 3, 4]

And so the Python openai SDK gives you a client object: once you instantiate it, you do your work through its methods. For our purposes, we’re going to use chat completions with function calling to request an analysis of text. I recommend visiting the official repo for documentation, etc. Let’s start by creating our client object.

# you may want to be sure your api key is in fact available to the python instance

# import os

# os.environ["OPENAI_API_KEY"]

from openai import OpenAI

client = OpenAI()

From here we can create completions, etc.

chat_completion = client.chat.completions.create(

model = "gpt-4",

messages = [

{

"role": "user",

"content": "Say this is a test"

}

]

)

chat_completion.choices[0].message.content

'This is a test.'

And we can continue the conversation by appending each reply’s content to the list of messages we send. If you’re having an actual conversation with ChatGPT, you’re going to want to save your messages in an external list and reference that instead of retyping the previous messages over and over again. An example is given here:

# Initialize conversation history

conversation_history = []

# Function to add a message and get a response

def chat_with_openai(user_message):

    # Add user message to history

    conversation_history.append({"role": "user", "content": user_message})

    # Get response from OpenAI

    chat_completion = client.chat.completions.create(

        model="gpt-4",

        messages=conversation_history

    )

    # Extract the response and add to history

    assistant_message = chat_completion.choices[0].message.content

    conversation_history.append({"role": "assistant", "content": assistant_message})

    return assistant_message

# Example usage

first_response = chat_with_openai("What is the capital of France?")

first_response

'The capital of France is Paris.'

second_response = chat_with_openai("Tell me more about where that is?")

second_response

'Paris is located in the north-central part of France. It is situated along the Seine River, in the heart of the Île-de-France region. Paris is approximately 450 kilometers southeast of London, UK, and around 300 kilometers northeast of Nantes, a city on the French Atlantic coast. Known as the "City of Light", Paris is famed for its historical monuments, museums, vibrant culture, fashion, and gastronomy. Some of its most iconic landmarks include the Eiffel Tower, the Louvre Museum, the Cathedral of Notre Dame, and the Champs-Élysées.'

Functions

We don’t really want to have a back and forth for our text analysis. In a perfect scientific world, each text we examine in a sentiment analysis would be isolated completely from all other data points to reduce bias. As such, we essentially want a new chat for each data point (corpus) that we’re working with, so we can dispense with the conversation history. But we do need a means of normalizing responses and making them easily digestible. Enter functions. In the chat completions API, functions (passed as tools) are basically a request to respond in a structured JSON format. They are passed to the completions method just like the messages and the model. I recommend saving your function definition as an external object.
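Because the structured reply arrives as a JSON string rather than a Python object, it can help to see the decoding step in isolation first. The sketch below is an illustration under stated assumptions, not part of the SDK: parse_sentiment is a hypothetical helper, and the sample string only mimics the shape of a tool-call arguments payload for a schema with a single numeric sentiment field between 0 and 1.

```python
import json


def parse_sentiment(arguments: str) -> float:
    """Decode a tool-call arguments string and return the sentiment score.

    Hypothetical helper: assumes the payload is a JSON object with one
    numeric field called "sentiment", expected to fall between 0 and 1.
    """
    payload = json.loads(arguments)  # raises ValueError on malformed JSON
    score = float(payload["sentiment"])  # raises KeyError if the field is missing
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"sentiment out of range: {score}")
    return round(score, 2)  # two decimal places, i.e. a precision of 0.01


# Illustrative payload written by hand, not real API output.
example_arguments = '{\n "sentiment": 0.85\n}'
parsed = parse_sentiment(example_arguments)
print(parsed)
```

Raising on an out-of-range value is one design choice among several; clamping or returning a missing value would also be reasonable. The tool definition itself comes next.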

tools = [

{

"type": "function",

"function": {

"name": "analyze_text",

"description": "Provide a sentiment analysis of provided text.",

"parameters": {

"type": "object",

"properties": {

"sentiment": {

"type": "number",

"description": "Number of precision 0.01. 0 is a negative sentiment, 1 is positive."

}

},

"required": ["sentiment"]

}

}

}

]

sentiment_completion = client.chat.completions.create(

model = "gpt-4",

tools = tools,

tool_choice = {"type": "function", "function": {"name": "analyze_text"}},

messages = [{"role": "user", "content": "I literally could not be any happier right now!"}]

)

sentiment_completion.choices[0].message.tool_calls[0].function.arguments

'{\n "sentiment": 1\n}'

So let’s wrap that in a function we can work with easily from reticulate’s Python interface in R.

import json

def return_sentiment(corpus):

    sentiment_completion = client.chat.completions.create(

        model = "gpt-4",

        tools = tools,

        tool_choice = {"type": "function", "function": {"name": "analyze_text"}},

        messages = [{"role": "user", "content": corpus}]

    )

    # the arguments come back as a JSON string, so decode them before indexing

    resp = sentiment_completion.choices[0].message.tool_calls[0].function.arguments

    resp = json.loads(resp)['sentiment']

    return resp

return_sentiment("I am so uinbelievably sad right now")

0.1

And now to run this bad boy from R. We’re going to borrow some reviews and create a data frame from them, then call the sentiment analysis and see whether the scores resemble the stars users assigned to their reviews:

stars = c(1, 5, 2, 1)

reviews = c(

r"(Strolled in Saturday afternoon when they weren’t busy. Immediately was seated and provided a glass of water and that’s where anything resembling service or hospitality ended. 30 minutes goes by during which I drank my water and waited, no one came by to take my order or refill my water. Ended up just walking out.)"

, r"(I've never had a negative experience at Casa. The portions are filling and the food is always delicious. They also have a very good variety of vegetarian options. What really separates casa from other similar style restaurants is its welcoming and friendly atmosphere. The staff are warm and welcoming, and treat you like family. I'm looking forward for my next trip down to get a burrito among good company!)"

, r"(Overpriced and not Mexican food. The food is Mexican adjacent at best and the queso is very thick Chile-con-queso. We paid $40 after getting a (surprisingly complicated to receive) comp on the queso we didn't eat because it was not properly advertised. The food is not bad if you're looking for something home cooked quality with a nice bar and environment But if you want authentic Mexican food there are other options around.

~(requested explanation from business) We believed the restaurant was authentic Mexican and asked for queso when we were waited on. The only "fix" I can think of would be to train your wait staff to give a quick explanation of the kind of queso you have before bringing it out. The wait staff was very nice and professional)"

, r"(Food should be baked on wax paper. They bake the tray so that customers burn themselves trying to unstick the food.)"

)

casa_reviews = data.frame(stars = stars, reviews = reviews)

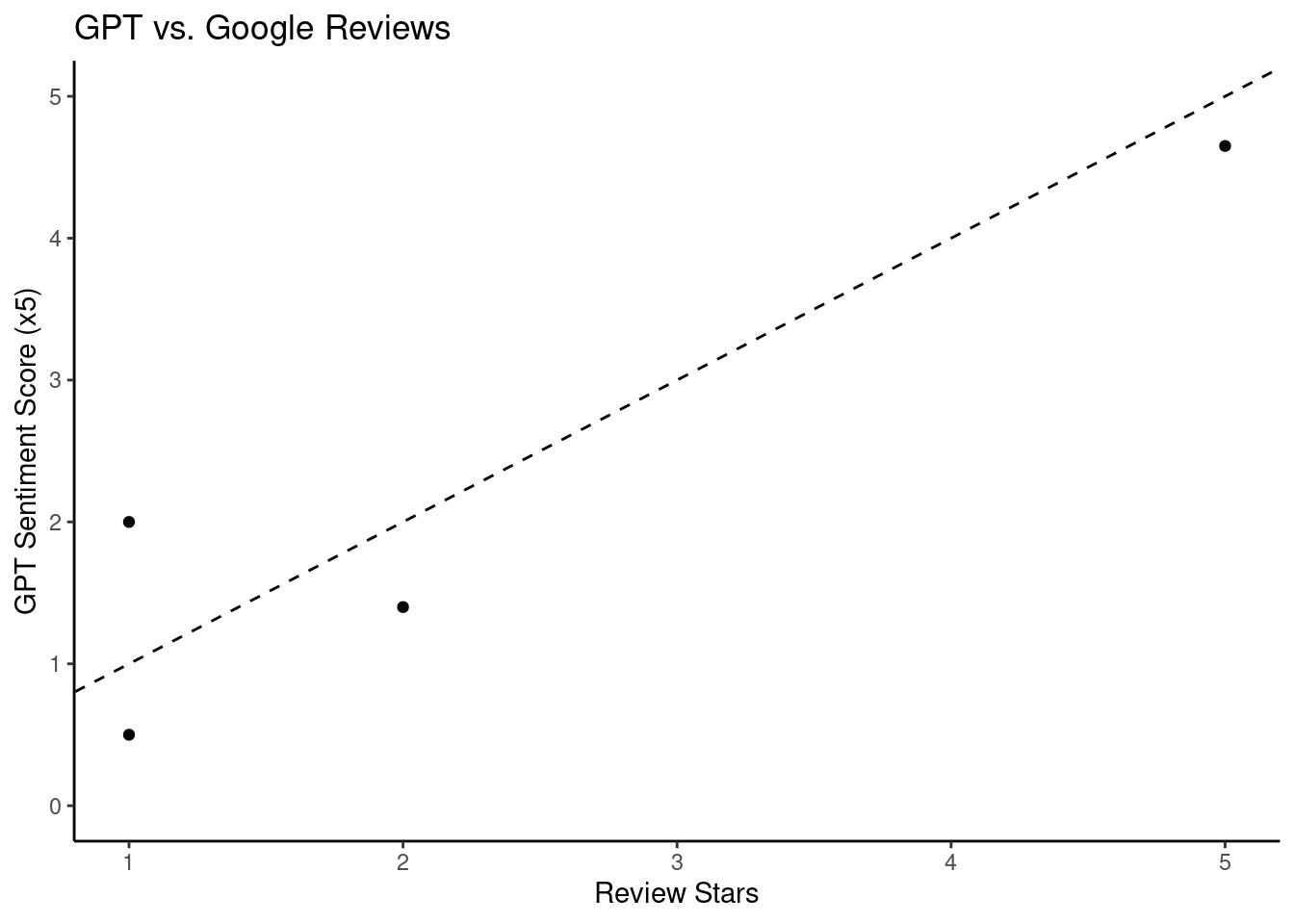

casa_reviews$gpt_score = sapply(casa_reviews$reviews, py$return_sentiment)

And we can plot the results to see if they match expectations:

library(ggplot2)

casa_reviews |>

ggplot(aes(x = stars, y = gpt_score*5)) +

geom_point() +

geom_abline(slope = 1, intercept = 0, linetype = 2) +

coord_cartesian(ylim = c(0, 5)) +

theme_classic() +

labs(x = "Review Stars", y = "GPT Sentiment Score (x5)", title = "GPT vs. Google Reviews")
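
One practical wrinkle with scoring reviews one call at a time is that a single failed request stops the whole loop. A minimal sketch of one way around that, on the Python side, is a wrapper that records a missing value and keeps going. Everything here is an assumption for illustration: score_all and fake_scorer are hypothetical names, and fake_scorer stands in for the API-backed function so the example runs offline.

```python
import math


def score_all(texts, scorer):
    """Apply `scorer` to each text and return NaN wherever a call fails.

    Hypothetical helper: `scorer` is any callable that takes a string and
    returns a number, such as the return_sentiment function defined earlier.
    """
    scores = []
    for text in texts:
        try:
            scores.append(float(scorer(text)))
        except Exception:  # e.g. a network error or a malformed reply
            scores.append(math.nan)  # keep the position, mark it missing
    return scores


def fake_scorer(text):
    """Stand-in for an API call so the sketch runs without a key."""
    if not text:
        raise ValueError("empty text")
    return 0.9 if "love" in text else 0.2


demo_scores = score_all(["I love it", "", "Not great"], fake_scorer)
print(demo_scores)
```

Catching every exception is a simplification; in real use you would probably want to log the failure or handle only the errors you expect. If you call a wrapper like this from R through py$, it is worth checking how reticulate converts the returned list before assigning it to a column.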